
Posted on 11/7/22 at 8:05 am to Joshjrn

Assuming these leaks are accurate, Nvidia is going to have a pretty serious pricing problem on its hands with the 4080:

Jensen can say Moore's law is dead all he likes, but a good percentage of his target audience isn't going to be thrilled about paying 72% more money for a 62% performance gain going from the 3080 to the 4080. Even if cards get more expensive, performance per dollar simply has to go up each generation. The only way this makes sense is if Jensen knows supply of 40 series cards will be low, so he's trying to force people into clearing out the old 30 series supply while also scalping, in-house, the people who are going to be early adopters no matter what.

Full article: Videocardz.com
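To put a number on the performance-per-dollar point, here's a quick sketch that only uses the two percentages from the post (72% more money, 62% more performance). The function name is just something made up for illustration; no actual MSRPs or benchmark data are assumed.

```python
def perf_per_dollar_change(price_increase: float, perf_increase: float) -> float:
    """Return the fractional change in performance per dollar.

    Both arguments are fractional increases, e.g. 0.72 for "72% more".
    """
    return (1 + perf_increase) / (1 + price_increase) - 1


# Figures from the post: 3080 -> 4080 costs 72% more for ~62% more performance.
change = perf_per_dollar_change(price_increase=0.72, perf_increase=0.62)
print(f"Performance per dollar change: {change:+.1%}")  # prints -5.8%
```

If the leaks hold, that works out to roughly 6% *less* performance per dollar going from the 3080 to the 4080, which is exactly the generational regression the post is complaining about.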


This post was edited on 11/7/22 at 8:07 am

Posted on 11/7/22 at 11:51 am to Joshjrn

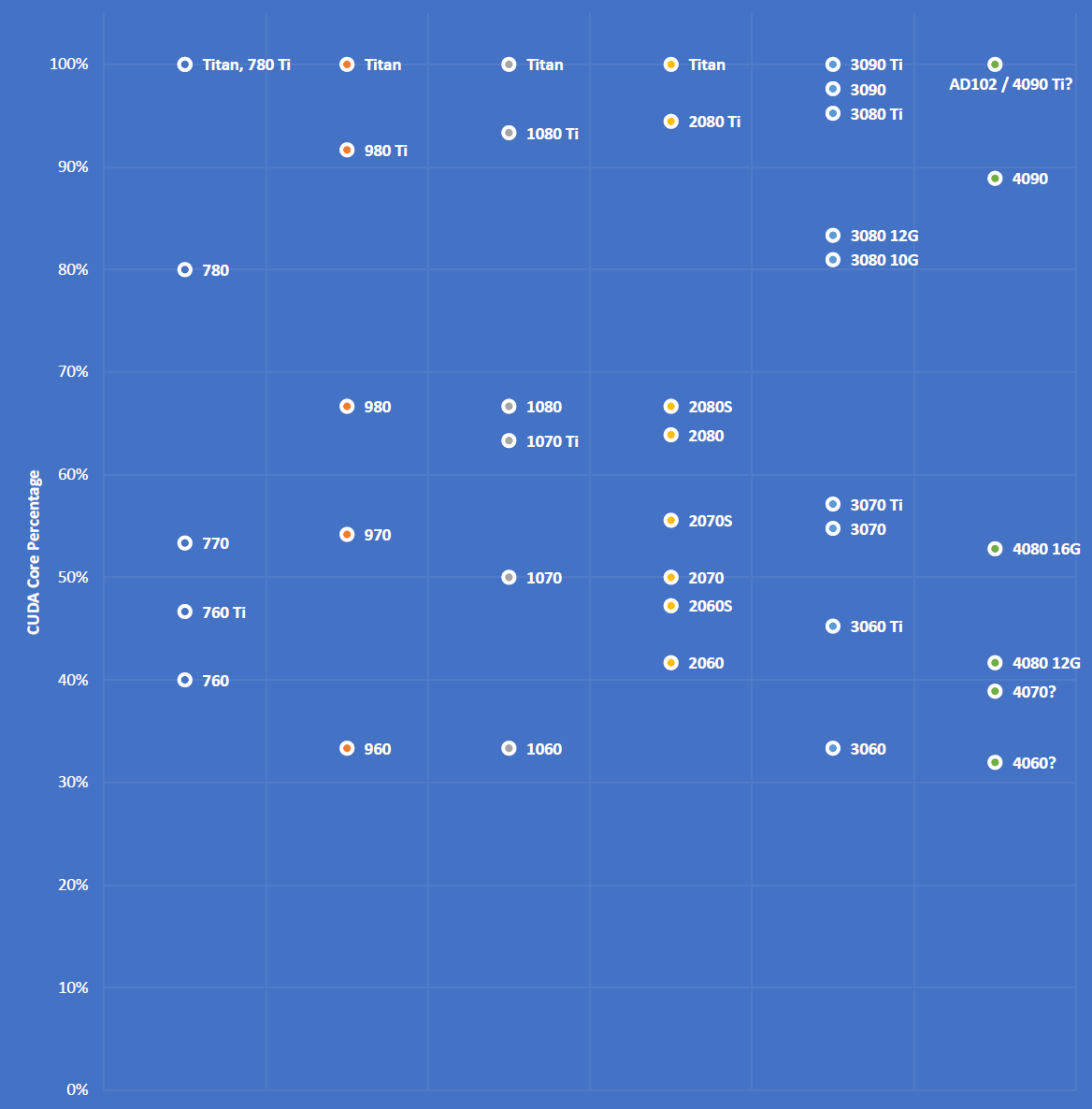

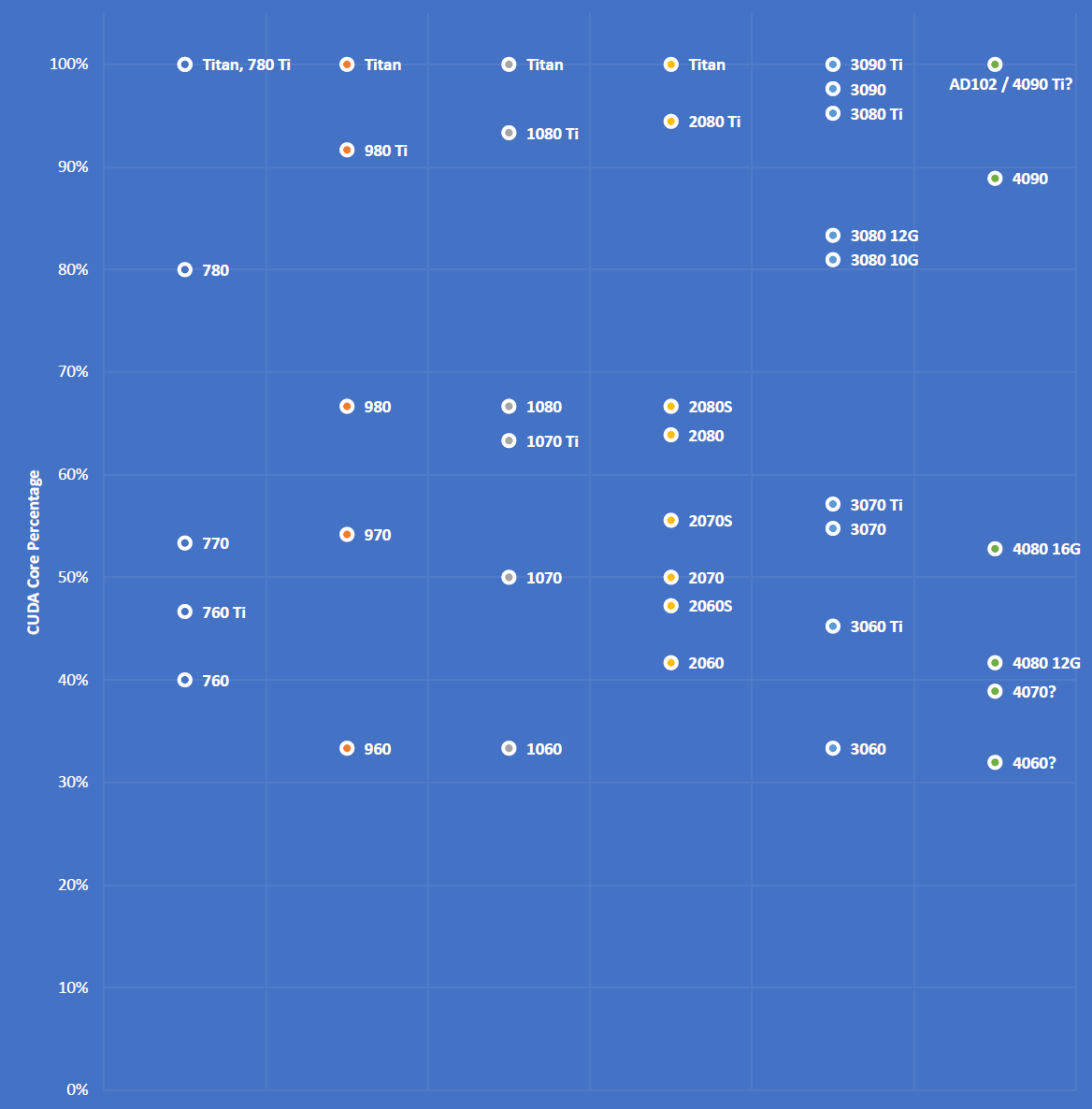

I came across this graphic someone made in the comments section. I think it does a great job of explaining why people are angry about what Nvidia is doing with the 40 series naming conventions:

Now, keep in mind, this isn't relative performance. What it's tracking is which SKU uses the entire die, with no cores cut away or disabled (thus 100%), and then where each SKU in that generation measures up from there as a percentage of CUDA cores that are present versus disabled. As a brief explanation, the 4090 has more disabled cores as a percentage of total die than the 3080Ti did. And the 4080 has more disabled cores as a percentage of total die than the 3070 did. That's absolutely insane, and it's pretty clear that Nvidia is looking for the 30 and 40 series to basically coexist as a single line (minus the 3090Ti), later slotting in a 4080Ti and 4090Ti at the 75%-ish and 100% marks, respectively.
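The comparison in that graphic is just enabled CUDA cores divided by the full flagship die of each generation. A rough sketch is below; note the core counts are *not* from the graphic or the post. They're assumed from Nvidia's published spec sheets (full GA102 and AD102 dies, 4080 meaning the 16GB card), so double-check them before leaning on the exact numbers.

```python
# CUDA core counts are ASSUMED from Nvidia's published specs,
# not read off the graphic in the thread.
FULL_DIE = {"30": 10752, "40": 18432}  # full GA102 (30 series) and AD102 (40 series)

SKUS = {
    "3080 Ti": ("30", 10240),
    "3070": ("30", 5888),
    "4090": ("40", 16384),
    "4080 (16GB)": ("40", 9728),
}


def enabled_share(generation: str, cores: int) -> float:
    """Fraction of the generation's full flagship die that a SKU has enabled."""
    return cores / FULL_DIE[generation]


for name, (gen, cores) in SKUS.items():
    print(f"{name}: {enabled_share(gen, cores):.1%} of full die")
```

With those assumed counts you get roughly 95.2% for the 3080 Ti vs 88.9% for the 4090, and 54.8% for the 3070 vs 52.8% for the 4080, which lines up with the two comparisons above.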


Posted on 11/11/22 at 10:12 am to Joshjrn

Ya, it sold out very quickly. It was a Series S/X controller for $35.99.

I edited the post because it was sold out. I just edited it and logged out without looking at the thread.

Ya, I use Xbox controllers as well. Action games are terrible on kb/m if you don't have to aim.


This post was edited on 11/11/22 at 10:20 am

Posted on 11/12/22 at 7:51 am to Joshjrn

4090 pricing is jarringly, absurdly high, but damn, that thing is a monster, even without using the entire die. This is the current top score for Port Royal:

For context, my 3080 just did an 11,783. Of course that's just at ambient and not with LN2, a custom BIOS, etc. But still, those are crazy single-card scores.

ETA: So to take some of the edge off the LSU game, I played with OCing the frick out of my VRAM. Got my Port Royal score up to 12,088 with my gaming undervolt still in place.


This post was edited on 11/12/22 at 2:36 pm

Posted on 11/12/22 at 2:35 pm to Joshjrn

Still insane they left out DisplayPort 2.0 or 2.1 and stuck with the older generation that requires DSC, like HDMI 2.1.

Makes no sense when Intel and AMD have DP 2.1 and are future-proof for basically forever.

Hey, spend $1,600 on this GPU and then use compression to display anything.


Posted on 11/12/22 at 2:42 pm to UltimateHog

quote:

Still insane they left out DisplayPort 2.0 or 2.1 and stuck with the older generation that requires DSC, like HDMI 2.1.

Makes no sense when Intel and AMD have DP 2.1 and are future-proof for basically forever.

Hey, spend $1,600 on this GPU and then use compression to display anything.

Certainly would have been a nice feature, but it doesn't particularly matter for the time being. I imagine 99.9% of the people who buy a 4090 will upgrade again before they are at all concerned about needing better than 4K/165Hz with DSC and 4K/98Hz without.
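For anyone wondering where the "4K/98Hz without DSC" ceiling comes from, here's a back-of-the-envelope sketch. It assumes DisplayPort 1.4a at HBR3 (4 lanes × 8.1 Gbit/s with 8b/10b encoding, so 25.92 Gbit/s usable) and 10-bit RGB (30 bits per pixel). It also ignores blanking intervals, so the real requirement is somewhat higher than what it prints. The function name is just for illustration.

```python
# DisplayPort 1.4a HBR3: 4 lanes x 8.1 Gbit/s, 8b/10b encoding -> 25.92 Gbit/s usable.
DP14_PAYLOAD_GBPS = 4 * 8.1 * 8 / 10


def active_pixel_gbps(width: int, height: int, refresh_hz: int, bits_per_pixel: int = 30) -> float:
    """Uncompressed bandwidth for active pixels only (blanking ignored), in Gbit/s."""
    return width * height * refresh_hz * bits_per_pixel / 1e9


for hz in (98, 120, 165):
    need = active_pixel_gbps(3840, 2160, hz)
    verdict = "fits" if need <= DP14_PAYLOAD_GBPS else "needs DSC"
    print(f"4K @ {hz} Hz, 10-bit: {need:.2f} Gbit/s -> {verdict}")
```

At 98Hz that's about 24.4 Gbit/s of pixel data, just under the 25.92 Gbit/s link, while 120Hz and 165Hz blow past it. That's why anything above that on DP 1.4a leans on DSC.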

Posted on 11/12/22 at 2:49 pm to Joshjrn

I would hope so. Every monitor at CES is DP2.0 or 2.1.

Makes zero sense to leave out such a major thing when your 2 competitors have it.


Posted on 11/12/22 at 3:04 pm to UltimateHog

I’m sure it saved them a few pennies per unit, or something similarly trite. But kind of like PCIe Gen3/4/5 x16, I just don’t think it’s going to tip the scales for many people this generation. Maybe next.

Posted on 11/15/22 at 2:54 pm to Joshjrn

Speaking of monitors, I just purchased a secondary (24"). My main is 32" and weighs 15 lbs. Does anyone have a recommended dual monitor mount? One that clamps to the desk.

I read some reviews and they seem to be mixed, but some say the arms move under the weight of the monitor, even if the specs say it's fine. I want to be sure that if I get one, it's a good one.


Posted on 11/15/22 at 3:08 pm to SaintEB

I've been close to buying a new one for about a year now.

Waiting for DP 2.0 monitors so I'm set for a long time, since it'll be the be-all and end-all of PC connections.

Still can't believe Nvidia left it out and Intel and AMD didn't.

Such a big thing to leave out, both right now and going forward.


This post was edited on 11/15/22 at 3:10 pm

Posted on 11/15/22 at 3:19 pm to SaintEB

Posted on 11/15/22 at 5:33 pm to SaintEB

quote:

Speaking of monitors, I just purchased a secondary (24"). My main is 32" and weighs 15 lbs. Does anyone have a recommended dual monitor mount? One that clamps to the desk.

I read some reviews and they seem to be mixed, but some say the arms move under the weight of the monitor, even if the specs say it's fine. I want to be sure that if I get one, it's a good one.

15 lbs is really heavy... for a clamp mount to comfortably hold that weight, you're almost certainly going to do some damage to the desk. So with that said, is there possibly merit to getting a mount that screws in instead of just clamping?

Posted on 11/16/22 at 8:10 am to Joshjrn

quote:

15 lbs is really heavy... for a clamp mount to comfortably hold that weight, you're almost certainly going to do some damage to the desk. So with that said, is there possibly merit to getting a mount that screws in instead of just clamping?

So, the ones I looked at have both options. You can drill the desk, I guess, and use a pass-through grommet type. There are also support plates that can be used with the clamp type to distribute the weight over the plates. My concern is the monitor arm itself moving. I'm pretty confident that I can make the clamp or pass-through grommet type stable. I just worry that the monitor won't stay put.

Posted on 11/16/22 at 8:11 am to Devious

quote:

I use this one

Do you have any issues with the monitors sagging/dropping down?

I don't think I will wall mount.

Posted on 11/16/22 at 8:15 am to SaintEB

Posted on 11/16/22 at 8:23 am to hoojy

Posted on 11/16/22 at 1:35 pm to SaintEB

quote:

Do you have any issues with the monitors sagging/dropping down?

None at all

Posted on 11/16/22 at 1:45 pm to bluebarracuda

I wish my area were that clean.

And that ceiling fan looks low enough to cut your head off.


This post was edited on 11/16/22 at 1:46 pm
