
re: PC Discussion - Gaming, Performance and Enthusiasts

Posted on 9/20/22 at 5:44 pm to UltimateHog


I completely agree. Hell, AMD moving into chiplets basically revolutionized the CPU landscape. It's simply a question of whether this generation bears that fruit, or whether it needs a few generations to "age like fine wine" like Zen needed.

I'm pulling for them. Something needs to give, because the Wattage Wars can't keep going like this.


Posted on 9/20/22 at 11:44 pm to Joshjrn

AMD RDNA 3 touts 50 per cent better perf per watt as rumours hint at 4GHz GPU

Good read on AMD maintaining commitment to power efficiency. Over 50% perf per watt gain with 5nm RDNA3.


quote:

In a blog post titled ‘Advancing Performance-Per-Watt to Benefit Gamers,’ senior vice president and product technology architect Sam Naffziger takes us on a trip down memory lane, pointing to significant efficiency gains across the last three generations of AMD Radeon graphics cards.

Most recently, Radeon RX 6000 Series GPUs are cited as having delivered a 65 per cent increase in performance-per-watt over RX 5000 Series cards built on the same 7nm process. Firing a shot across the bow of upcoming competition, Naffziger points out “graphics card power has quickly pushed up to and beyond 400 watts.” RTX 4090, as you’ve no doubt heard, is rumoured to ship with a lofty 450W TDP.

What’s of interest to the enthusiast awaiting next-gen hardware is that Naffziger reckons AMD is on track to deliver on its promise of a greater than 50 per cent increase in performance per watt with 5nm RDNA 3.

Promising “top-of-the-line gaming performance” in “cool, quiet, and energy-conscious designs,” AMD recalls building its CPU and GPU architectures from the ground-up, and a lot of those early bets have begun to pay off. RDNA 3’s efficiency is made possible, says AMD, through refinement of the adaptive power management featured in RDNA 2, as well as a new generation of AMD Infinity Cache.

Efficiency gains typically lend themselves to higher frequencies, so just how quick might a next-gen AMD GPU operate? According to the latest Twitter leaks from @9550pro, we could be looking at GPUs hitting almost 4GHz.

This post was edited on 9/20/22 at 11:50 pm
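Since each of those figures is relative to the previous generation, they stack. A minimal sketch of the compounding, assuming the gains simply multiply and crediting RDNA 3 with exactly AMD's claimed floor of 50% (the article only promises "greater than" 50%):

```python
# Generation-over-generation perf-per-watt gains as quoted in the article above.
# Assumptions: the gains compound multiplicatively, and RDNA 3 is credited with
# exactly its claimed floor of +50% (AMD only promises "greater than" 50%).
GAINS = [
    ("RX 6000 (RDNA 2) vs RX 5000 (RDNA 1)", 0.65),
    ("RDNA 3 vs RDNA 2, claimed minimum", 0.50),
]


def cumulative_multiplier(gains):
    """Multiply (1 + gain) across generations to get the overall perf-per-watt factor."""
    total = 1.0
    for _label, gain in gains:
        total *= 1.0 + gain
    return total


total = cumulative_multiplier(GAINS)
print(f"RDNA 3 vs RDNA 1: at least {total:.3f}x perf per watt")
# Holding performance constant, power draw scales with the inverse of that factor.
print(f"Power for RDNA 1-level performance: about {1 / total:.0%} of the original")
```

If AMD hits its target, that's roughly 2.5x the perf per watt of an RX 5000 card in two generations.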

Posted on 9/21/22 at 12:17 am to UltimateHog

Posted on 9/21/22 at 8:11 am to UltimateHog

quote:

Anotha one.

About god damned time...

Posted on 9/21/22 at 8:36 am to thunderbird1100

quote:

RTX 4090 is $1600

RTX 4080 16GB $1200

RTX 4080 12GB $900

Wow, talk about continuing to move the market in a hilarious direction, especially with the 4080 now split into 2 tiers with a $300 difference in price (why not call the 16GB version the 4080 Ti?). RTX 3080 was $700 at launch.

Memory isn't the only difference between the 2 4080s. The 12gb one is basically a 4070. Yet they're selling it for $400 more than the 3070 launched at. And there's no crypto boom or pandemic going on. WTF is Nvidia doing?!

Posted on 9/21/22 at 11:45 am to MetroAtlantaGatorFan

Yep, I went on an in-depth rant a page or two ago:

quote:

Historically, the xx90 and xx80 use the same die, with the xx80 using a cut down version, and this is generally the 102 die. The gaming performance is usually close, with the big difference being VRAM for production. Then, the xx70 is generally one step down, using the 103 die.

Here, the 4090 is using 102. The 4080 16gb is using 103. And the 4080 12gb is using 104. So Nvidia basically created a massive gaming performance separation between the 4090 and 4080 16gb by using different dies and in spite of that, nearly doubled the MSRP of the xx80 generation over generation. And then in what I consider the most insulting move, shifted down-die again with the 4080 12gb. So instead of the xx70 being one full die removed from the xx90, the xx80 12gb is two full dies removed from the xx90. That's insanity.

Posted on 9/21/22 at 2:02 pm to Joshjrn

Yeah I initially thought the only difference was 4gb since they've done that previously with the 1060 and 2060.

Posted on 9/21/22 at 10:29 pm to bamabenny

quote:

What’s the 3080 die?

Both the 3090 and 3080 used a GA102 die. Basically, if the silicon quality was good enough, it became a 3090. But if small areas of the die had defects, those areas would be disabled and it would be used in a 3080. That’s why the gaming performance between a 3090 and 3080 wasn’t all that significant. 10% or so performance boost at over double the price.

Now we are talking about a 4090 potentially being 75%+ more powerful at less than 50% price increase compared to a 4080 16gb. It’s fricked.

This post was edited on 9/21/22 at 10:30 pm
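Putting rough numbers on that: a minimal sketch comparing perf per dollar between each flagship and its xx80 sibling. Assumptions: launch MSRPs of $699 (3080) and $1,499 (3090), the announced $1,600 and $1,200 for the 4090 and 4080 16GB, and the gaming performance gaps from the post above (~10% for Ampere, a rumored 75%+ for Ada, which is not a benchmark result).

```python
from dataclasses import dataclass


@dataclass
class Matchup:
    name: str
    flagship_price: float
    xx80_price: float
    perf_gain: float  # flagship's gaming performance over the xx80, as a fraction (assumed)

    def price_premium(self) -> float:
        """How much more the flagship costs, as a fraction of the xx80 price."""
        return self.flagship_price / self.xx80_price - 1.0

    def value_ratio(self) -> float:
        """Flagship perf-per-dollar divided by xx80 perf-per-dollar (>1 favors the flagship)."""
        return (1.0 + self.perf_gain) / (self.flagship_price / self.xx80_price)


# Prices and performance gaps are the assumptions stated above, not measurements.
matchups = [
    Matchup("3090 vs 3080", 1499, 699, 0.10),
    Matchup("4090 vs 4080 16GB", 1600, 1200, 0.75),
]

for m in matchups:
    print(f"{m.name}: +{m.price_premium():.0%} price, +{m.perf_gain:.0%} perf, "
          f"value ratio {m.value_ratio():.2f}")
```

A ratio under 1 means the flagship costs more per frame than the xx80, which is the usual pattern (the 3090 lands around 0.51). A ratio above 1 (about 1.31 on the rumored 4090 numbers) means the xx80 is the worse deal.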

Posted on 9/23/22 at 8:12 am to Joshjrn

Something tells me AMD might be willing to deal a big blow to Nvidia here. I think Nvidia is a bit tone deaf to the current state of people's pockets over the last year+. Essentially rebadging a 70-series $500-ish card as a 12 GB 4080 for $900 is a gigantic slap in the face to people who buy mid-range GPUs.

AMD can also just join the profit party or take a bit of an opportunity to take over a huge part of market share.

If AMD can put something out that beats a 4080 12 GB for $150-$200 less, they will win a lot of people over.

Or they can also price a 700-series-class card at $800-$900 like Nvidia, their 800-series-class card at $1100-$1200 and a 900-series-class card at $1500-$1600, and just make us all if the performance is similar (or better) to Nvidia's cards at those prices.


The question is whether they want the profits or the market share. The market share is there for the taking in a big way while Nvidia clears out 30 series stock over the next year and overprices its 40 series cards for now, specifically the 2 different "4080s".


This post was edited on 9/23/22 at 8:23 am

Posted on 9/23/22 at 8:22 am to thunderbird1100

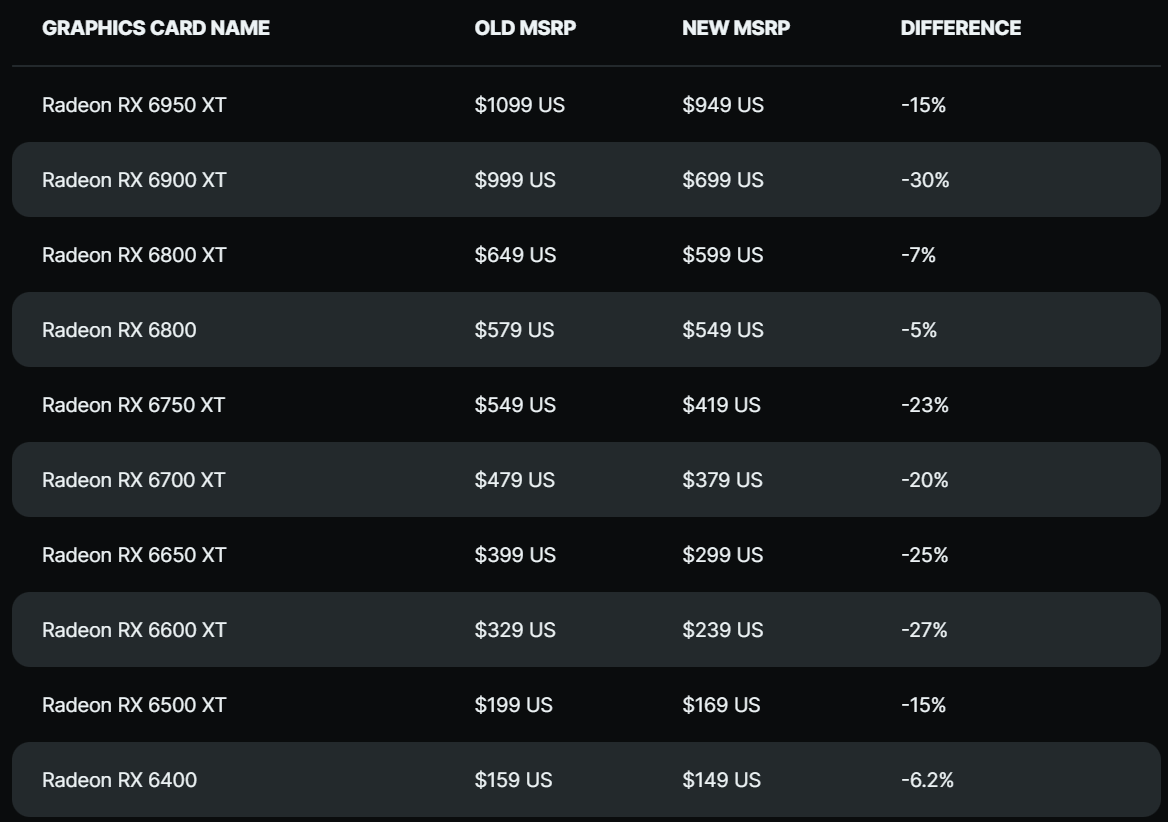

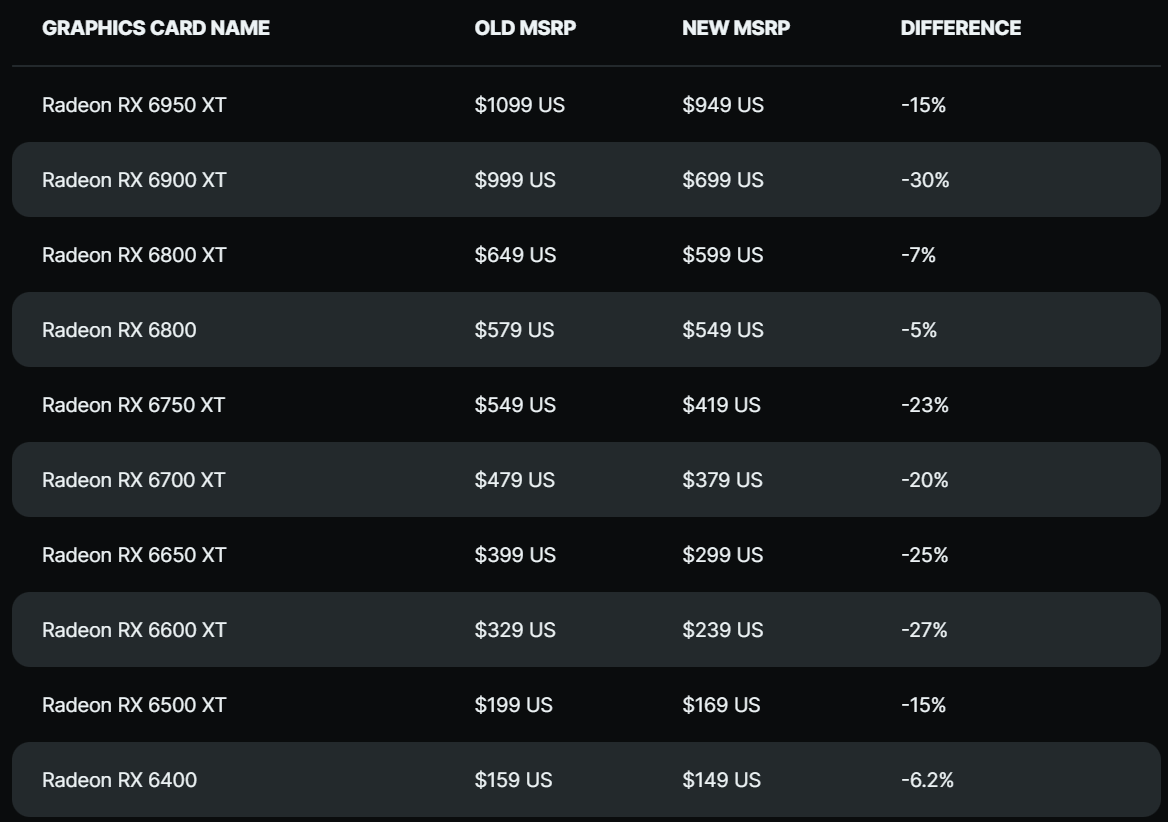

AMD dropped the prices of their 6000 series cards

Posted on 9/23/22 at 8:28 am to finchmeister08

Frankly if I was building a new PC right now, I'd probably go after a 6750XT or a 6800 for my gaming needs (or wait for AMD RX 7000 series).

I think my 2070S might be the last Nvidia card I buy for a while.


This post was edited on 9/23/22 at 8:31 am

Posted on 9/23/22 at 8:32 am to finchmeister08

I've seen a few 6900 XTs over the past month or two for $700 new. That $949 price for the 6950 XT still makes no sense considering the very small (5-10%) performance bump over a 6900 XT. Should be an $800 card at most.

$700 or less is kind of a bargain for a 6900 XT for now until the new cards hit. $600 and under for a 6800 XT is also very appealing.

I like seeing the 6700 XT at $379 now too. I remember getting my 5700 XT for $350 brand new when they came out, thanks to a $50-off deal at Micro Center, and I still have that 5700 XT. I would consider a 6700 XT at around $350 too, but it looks like I can only get $200-$225 for my 5700 XT now. My next card will be a 4K card, though, since my next big purchase will be a 4K projector. The 5700 XT is not enough for that, and the 6700 XT is closer but still not quite there for 4K gaming in some cases. I'd imagine something like a 7700 XT shouldn't break much of a sweat.


Posted on 9/23/22 at 10:15 am to thunderbird1100

quote:

Something tells me AMD might be willing to deal a big blow to Nvidia here. I think Nvidia is a bit tone deaf to the current state of people's pockets over the last year+. Essentially rebadging a 70-series $500-ish card as a 12 GB 4080 for $900 is a gigantic slap in the face to people who buy mid-range GPUs.

I don’t think it’s tone deafness as much as it is desperation in service to the shareholders. In the last twelve months, Nvidia’s stock is down 65%, from a high of $346 to a low of $122. I think Huang knew exactly how people would react, but didn’t see any other option that would keep the shareholders off his back in the short term.

Posted on 9/23/22 at 2:00 pm to thunderbird1100

I hope so. AMD has closed the performance gap and actually beats Nvidia now in efficiency.

Cheapest prices per PCPartPicker (PCPP):

6600: $250 (67 FPS at 1080p ultra)

3060: $370 (70 FPS at 1080p ultra)

6600 XT: $330 (78 FPS at 1080p ultra)

6650 XT: $298 (80 FPS at 1080p ultra)

3060 Ti: $493 (70 FPS at 1440p ultra)

6700 XT: $355 (71 FPS at 1440p ultra)

3070: $500 (78 FPS at 1440p ultra)

6750 XT: $420 (75 FPS at 1440p ultra)

6900 XT: $700 (63 FPS at 4k ultra)

3090: $960 (69 FPS at 4k ultra)

6950 XT: $990 (70 FPS at 4k ultra)

3090 Ti: $1100 (76 FPS at 4k ultra)
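One quick way to compare these is dollars per frame within each resolution tier. A minimal sketch using only the prices and FPS figures listed above; it ignores ray tracing, upscalers like DLSS/FSR, and power draw, and the FPS numbers are averages whose source isn't stated here.

```python
from collections import defaultdict

# (card, cheapest PCPartPicker price in USD, average FPS at ultra, resolution) as listed above.
CARDS = [
    ("6600", 250, 67, "1080p"),
    ("3060", 370, 70, "1080p"),
    ("6600 XT", 330, 78, "1080p"),
    ("6650 XT", 298, 80, "1080p"),
    ("3060 Ti", 493, 70, "1440p"),
    ("6700 XT", 355, 71, "1440p"),
    ("3070", 500, 78, "1440p"),
    ("6750 XT", 420, 75, "1440p"),
    ("6900 XT", 700, 63, "4k"),
    ("3090", 960, 69, "4k"),
    ("6950 XT", 990, 70, "4k"),
    ("3090 Ti", 1100, 76, "4k"),
]


def dollars_per_fps(cards):
    """Group cards by resolution and sort each group by price per average frame (cheapest first)."""
    groups = defaultdict(list)
    for name, price, fps, res in cards:
        groups[res].append((price / fps, name))
    return {res: sorted(rows) for res, rows in groups.items()}


for res, rows in dollars_per_fps(CARDS).items():
    print(res)
    for cost, name in rows:
        print(f"  {name:<8} ${cost:.2f} per FPS")
```

Only compare within a tier, since the FPS figures were measured at different resolutions. On these numbers, the cheapest card per frame in each tier is an AMD card (6650 XT, 6700 XT, 6900 XT).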


Posted on 9/24/22 at 11:40 pm to MetroAtlantaGatorFan

Nov. 3rd will be interesting. If the price is underwhelming, I'll grab a 3080 off eBay for cheap.

Posted on 9/26/22 at 3:41 pm to hoojy

Posted on 9/26/22 at 8:47 pm to hoojy

They're legit, and pretty spot on. If you're building new, go for it, IF you have the money and you want something now.

But personally, I'd wait for the cheaper B650 boards.

I did just that for my 5600X ITX build, and the B550 ITX board I got had superior VRMs and was cheaper than the X570 ITX boards.

Plus, add in the cost of DDR5 and, well, yeah... you get what you pay for.

The performance gains are significant, but it comes at the cost of more spending and higher temperatures.


Posted on 9/27/22 at 8:20 am to hoojy

The Ryzen 7xxx reviews are telling me to just upgrade my Ryzen 3600 to a $150 5600, or to a 5800X3D if the price drops some there. The 5800X3D is a helluva gaming chip, it wouldn't require me to go out and buy a new mobo and DDR5 RAM, and it's seemingly just as fast in games as any new Ryzen 7xxx chip (until they release 3D cache versions of those next year).

This post was edited on 9/27/22 at 8:21 am

Posted on 9/27/22 at 8:52 am to thunderbird1100

quote:

The Ryzen 7xxx reviews are telling me to just upgrade my Ryzen 3600 to a $150 5600, or to a 5800X3D if the price drops some there. The 5800X3D is a helluva gaming chip, it wouldn't require me to go out and buy a new mobo and DDR5 RAM, and it's seemingly just as fast in games as any new Ryzen 7xxx chip (until they release 3D cache versions of those next year).

If you have a 3600, and want to upgrade, then yes - pretty much.

Going to a 5600, 5600x or a 5800X3D (heck even a 5700x), will extend your build by 4-5 years IMO.

I'd hold off on 7XXX unless you are building a brand new workstation and need the chipset features offered by X670/X670E - honestly, B650/B650E should be more than enough for 95% of people.
