
re: OFFICIAL: Sam Altman to return as Open AI CEO w/New board

Posted on 11/22/23 at 9:14 am to tiggerthetooth

Supposedly D’Angelo was the main proponent of forcing Sam out, so I’m surprised he’s back on the board. He’s CEO of Quora, and OpenAI released a direct competitor to one of Quora’s new products a few weeks ago without warning, which prompted D’Angelo to convince the board to force Sam out.

Posted on 11/22/23 at 9:38 am to tiggerthetooth

quote:

The Ilya side wants to decelerate and do more "studies" to make sure it's all "safe". To make sure it doesn't hurt feelings and mirrors Ilya's sense of morality.

Ilya would hold back world-changing technologies that could save thousands of lives just to make AI a little "less racist" or less anti-LGBTQ.

One sees AI as a tool to benefit the world, the others want some sort of god-like being.

Let me preface this by saying I don’t know enough about the personalities involved to address your portrayal of these specific individuals.

That being said, I’m a little surprised to see the entire concept of “AI safety” being mocked in this thread. AI safety goes way beyond SJW concerns. Once you hit AGI, ASI becomes a near certainty. And when you’re dealing with a computer system that’s way smarter than any human, you better make damn sure you programmed its goals correctly.

It just feels a little disingenuous to lump all of the serious researchers working on AI safety (who, BTW, are few and far between compared to the people working on making more advanced AIs) in with folks who are just worried about offending people.

Posted on 11/22/23 at 10:11 am to Upperdecker

quote:

Supposedly D’Angelo was the main proponent of forcing Sam out, so I’m surprised he’s back on the board. He’s CEO of Quora, and OpenAI released a direct competitor to one of Quora’s new products a few weeks ago without warning, which prompted D’Angelo to convince the board to force Sam out.

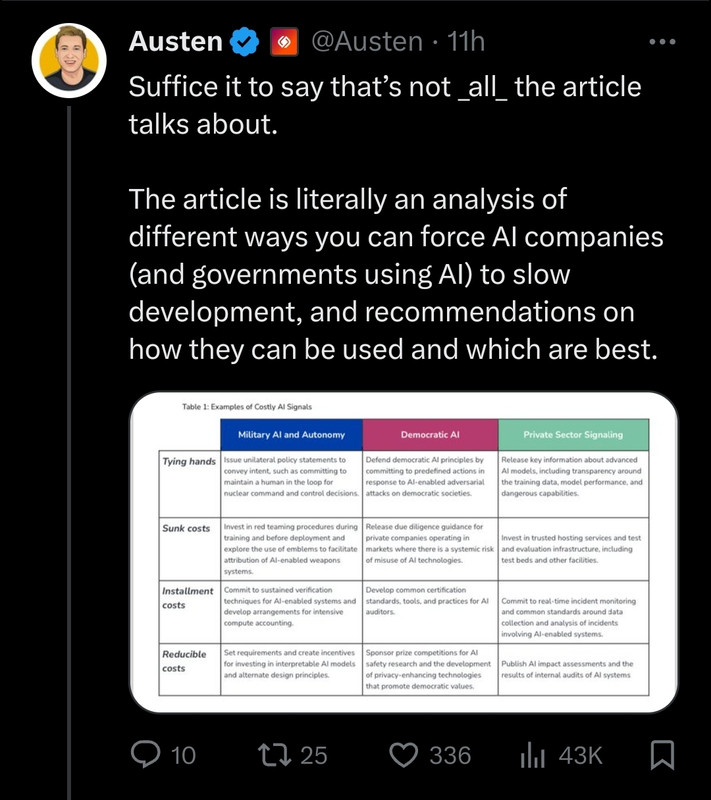

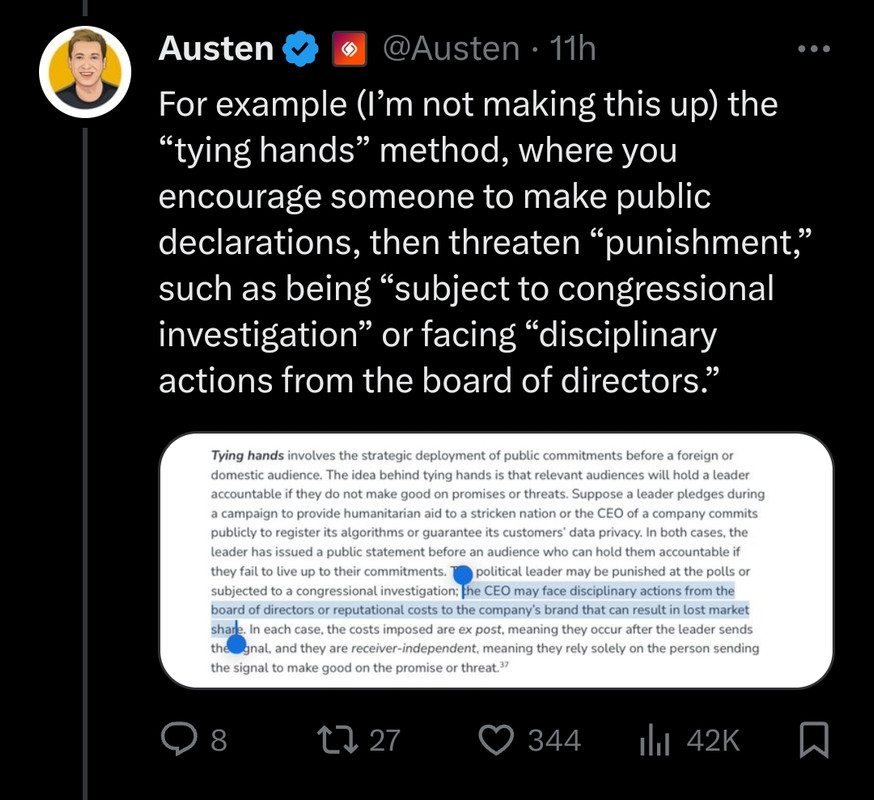

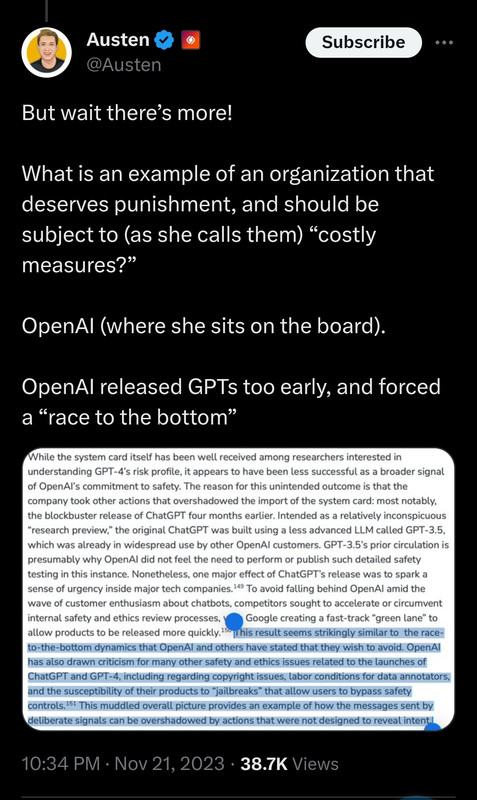

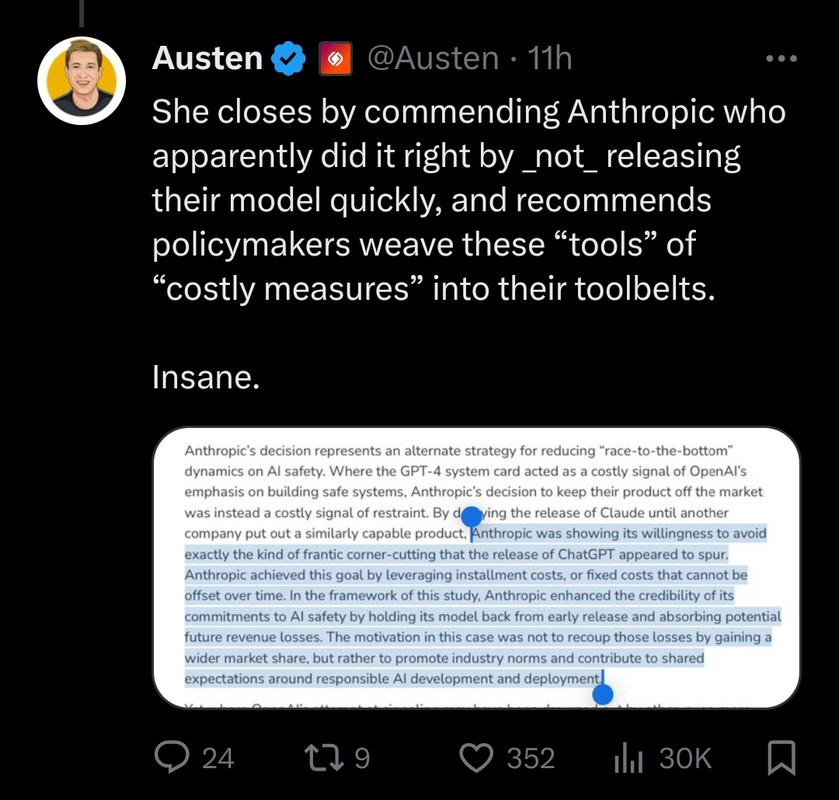

Supposedly Helen Toner, the UN program recruit/product, played her role.

Posted on 11/22/23 at 10:16 am to lostinbr

quote:

That being said, I’m a little surprised to see the entire concept of “AI safety” being mocked in this thread. AI safety goes way beyond SJW concerns. Once you hit AGI, ASI becomes a near certainty. And when you’re dealing with a computer system that’s way smarter than any human, you better make damn sure you programmed its goals correctly.

Literally ZERO evidence to support that this is a realistic outcome. Comes off as someone who watched Terminator one too many times. It's pure sci-fi doomerism, and this doomer thinking is enabling bad actors to seize control of AI progress under the veil of "ethics concerns". Never mind that there's absolutely nothing about them that makes them more ethical, other than a perceived superior understanding of ethics based on their academic background.

It's another inside-outside game where activists on the inside are causing trouble to steer the power of the technology into the hands of centralizing bureaucrats who think they have to dictate how the power is used. Same people that constantly "fear" the end of democracy.

This post was edited on 11/22/23 at 10:22 am

Posted on 11/22/23 at 10:22 am to tiggerthetooth

quote:

Literally ZERO evidence to support that this is a realistic outcome. Comes off as someone who watched Terminator one too many times.

Zero evidence to support what as a realistic outcome? ASI? Because that’s the only actual outcome I suggested.

Saying AGI will almost certainly lead to ASI makes me sound like someone who has watched Terminator one too many times? Come on, man.

Posted on 11/22/23 at 10:23 am to lostinbr

quote:

Zero evidence to support what as a realistic outcome? ASI? Because that’s the only actual outcome I suggested.

No evidence of what ASI even is. It's only discussed but there's no clear definition of what it is or how to build it. Only assumptions.

Posted on 11/22/23 at 10:31 am to lostinbr

quote:

That being said, I’m a little surprised to see the entire concept of “AI safety” being mocked in this thread. AI safety goes way beyond SJW concerns. Once you hit AGI, ASI becomes a near certainty. And when you’re dealing with a computer system that’s way smarter than any human, you better make damn sure you programmed its goals correctly.

I personally think it's a very legitimate concern. Not necessarily that AI will eventually become fully sentient, but that it almost certainly will be used at some point for nefarious purposes if some safeguards are not considered. Infosec researchers are already saying that phishing e-mails could get very difficult to identify if the usual hallmarks (grammar mistakes, misspellings, awkward translated phrasing, etc.) are made irrelevant by generative AI.

Posted on 11/22/23 at 10:39 am to tiggerthetooth

quote:

No evidence of what ASI even is. It's only discussed but there's no clear definition of what it is or how to build it. Only assumptions.

I mean people are still debating what AGI “even is.” Yes, the definition has narrowed - but only because recent progress in the field has made it much more relevant.

So sure, ASI seems like a nebulous concept right now in the same way that “AGI” or “strong AI” were nebulous terms (if they even existed; I’m not sure) 50 years ago.

You don’t have to be afraid of Skynet to think that self-improving AI might turn into a net negative for our species, if not properly oriented.

Note that I said might turn into a net negative, not will.
