
re: PC Discussion - Gaming, Performance and Enthusiasts

Posted on 1/4/23 at 4:24 am to Joshjrn


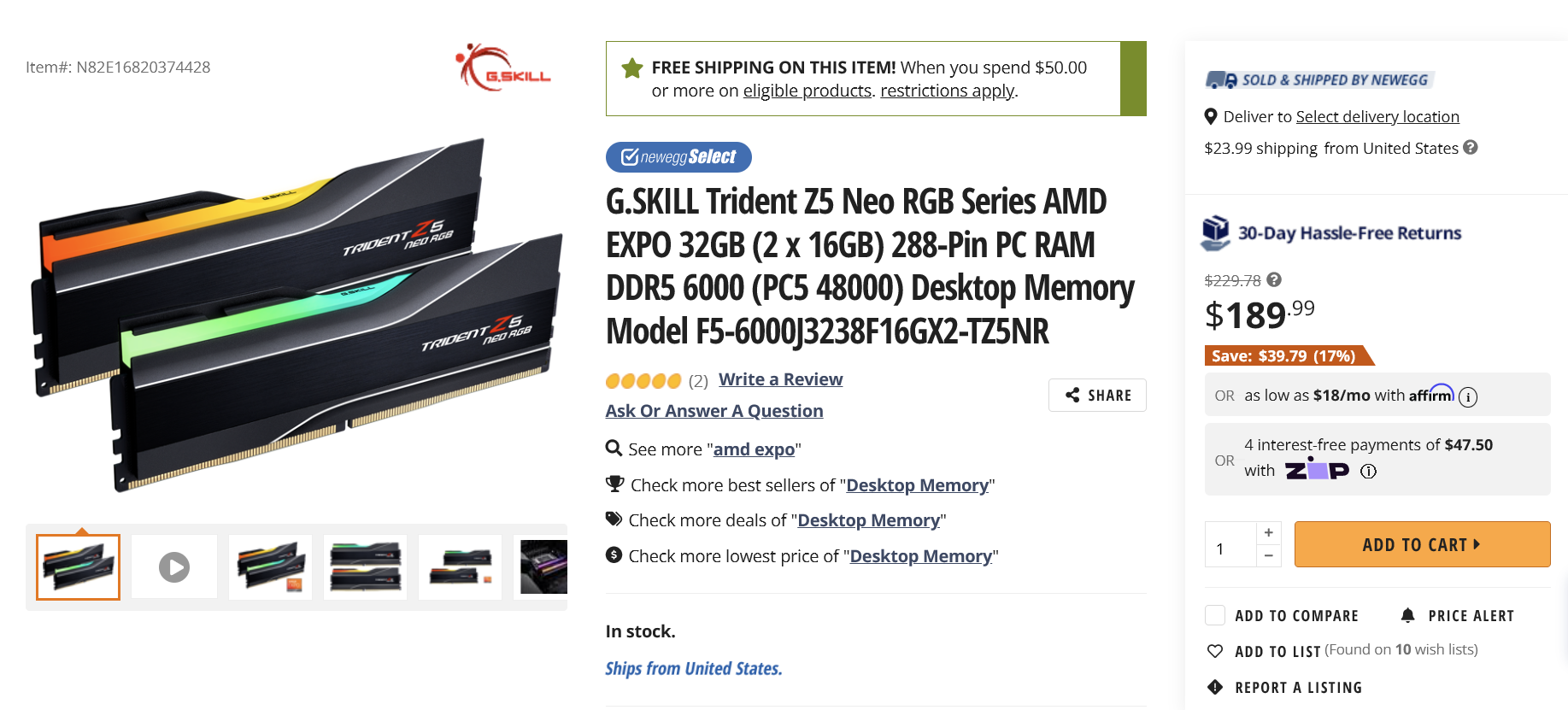

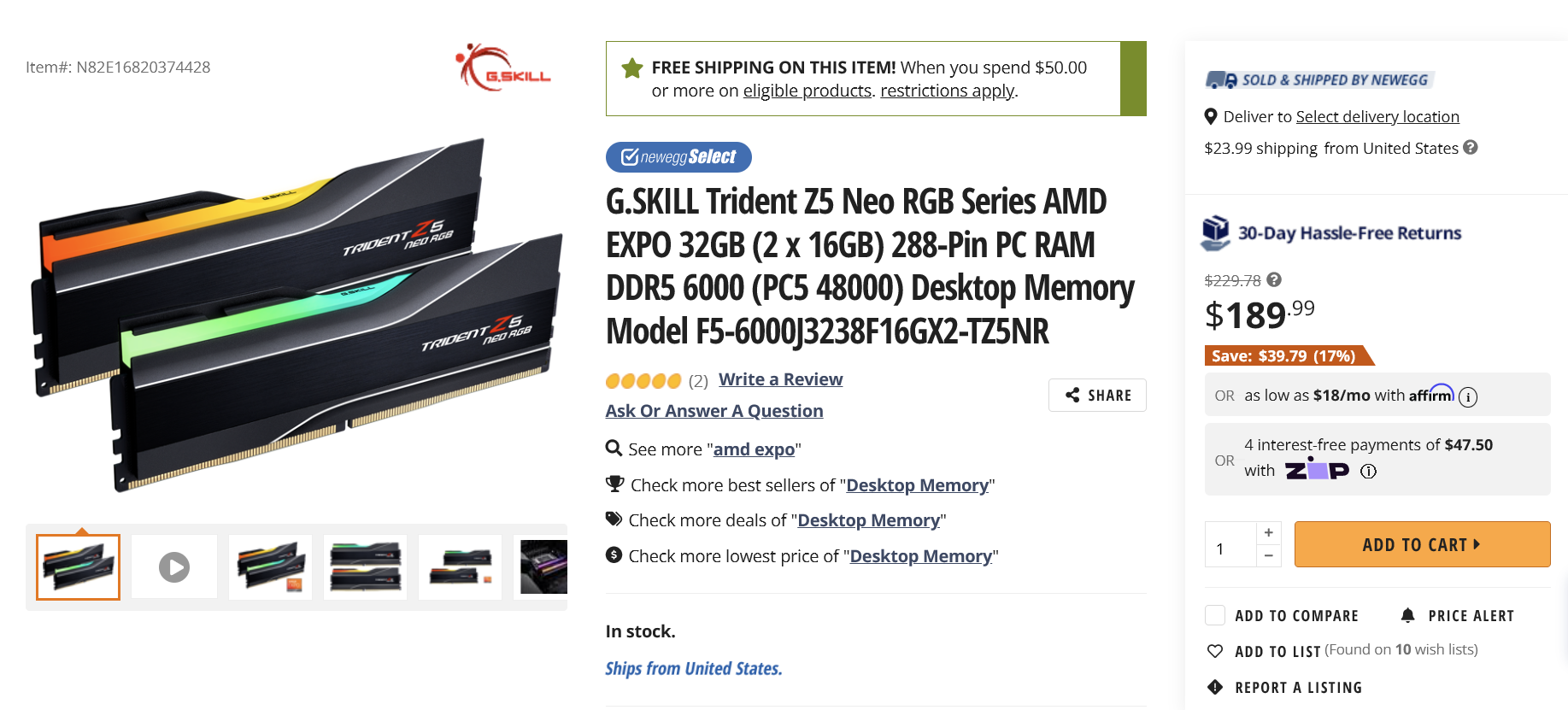

Alright, let's find some RAM at 4am.

We know DDR5-6000 is the Zen 4 sweet spot per AMD, and AMD now has its own EXPO profiles as a rival to XMP. 6200 is tough to get stable and not worthwhile at all.

I prefer low CL anyway, so let's look exclusively at CL32 kits, which just came out a few months back and beat the previous CL36 kits.

I'm not a frills person, so the best for the price appears to be the G.Skill Flare X5 EXPO 32GB kit at $168.

Or it's $20 more just to have it in the Z series >

Well, that was fast. I guess G.Skill, OLOy and Team Group are still the only ones with CL32 kits.

We'll go with the Z5 Neo CL32 6000 for the money.
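For a quick sanity check on CL32 vs CL36 at the same speed: a common rule of thumb is that first-word latency in nanoseconds is about CL × 2000 / data rate (MT/s), because DDR transfers data twice per clock, so one clock at 6000 MT/s lasts 2000/6000 ns. The sketch below just applies that approximation; the function name is made up for illustration, and it ignores the other timings (tRCD, tRP and so on) that also matter in practice.

```python
def first_word_latency_ns(cas_latency: int, data_rate_mts: int) -> float:
    """Approximate CAS latency in nanoseconds (rule of thumb, not a benchmark).

    DDR transfers twice per clock, so the clock in MHz is data_rate / 2 and
    one cycle lasts 1000 / (data_rate / 2) = 2000 / data_rate nanoseconds.
    """
    return cas_latency * 2000 / data_rate_mts


# Compare the two kit generations at the DDR5-6000 sweet spot.
for cl in (32, 36):
    print(f"DDR5-6000 CL{cl}: {first_word_latency_ns(cl, 6000):.2f} ns")
```

At DDR5-6000 that works out to roughly 10.67 ns for CL32 versus 12 ns for CL36.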


This post was edited on 1/4/23 at 4:35 am

Posted on 1/4/23 at 7:50 am to boXerrumble

quote:

I'd legit consider the 4070 Ti if they made a 2-2.2 slot variant; unfortunately, that doesn't look like it's happening.

Not sure about your exact measurements, but the Ventus looks like it's about 2.25 slots: LINK

Posted on 1/4/23 at 8:11 am to Joshjrn

quote:

Is that marketed directly to content streamers or something? Because yeah, that looks like a ton of extra weight for very little payoff.

I feel like content creators and possibly Excel users would be the only beneficiaries.

An interesting concept, I guess, but I would much rather just carry around a portable monitor and a lighter-weight laptop.

Posted on 1/4/23 at 8:26 am to bluebarracuda

Posted on 1/4/23 at 5:18 pm to boXerrumble

quote:

boXerrumble

Nvidia has jumped the fricking shark with these prices. At this point, the only card that actually makes sense to me this generation is the 4090, and that's only because it's the best of the best, and that comes with a price. After that, the 7900 XTX isn't insultingly bad, which is actually high praise this generation.

Everything else? frick 'em. Buy something from last gen deeply discounted or just wait on a gpu purchase.

Posted on 1/4/23 at 8:59 pm to Joshjrn

These 7040 mobile chips seem like beasts. They use Xilinx AI tech from AMD's acquisition.

Posted on 1/4/23 at 9:58 pm to UltimateHog

These come out next month and I see no pricing? What?

Going 7900X3D either way. The 7950X3D's extra 4MB of L2 cache isn't worth it if you don't need even more cores.

This post was edited on 1/4/23 at 10:05 pm

Posted on 1/4/23 at 10:11 pm to UltimateHog

It’s interesting to me that AMD has increased clock speed up the stack this generation, which is quite different from last gen. Can’t say that I love it. Most people have a use for increased clock speeds, but few people need more than 8/16 cores/threads. Making people pay for cores they don’t need to get clocks they do is a bit meh. But with that said, from a financial perspective, I get it.

Posted on 1/4/23 at 10:32 pm to Joshjrn

Yeah, I'm very curious to see how much OC room they have with the boost clocks already at 5.6 and 5.7 GHz for the top two chips. Surely not much more than 100-200 MHz. Maybe a golden one can do 6 GHz.

With how insanely well 3D V-Cache scales with clocks, that's going to be quite a huge jump.

Looks like we'll easily see +30% gains in gaming performance versus the 5800X3D. Awesome.


Posted on 1/5/23 at 11:01 am to UltimateHog

I don't understand why they limited the 7800X3D so much, to only 5.0 GHz, compared to 5.6/5.7 GHz for the 7900X3D/7950X3D.

Then, interestingly, they used the 7800X3D for the gaming benchmarks rather than the 7900X3D/7950X3D, which should be faster. The 7900X3D/7950X3D boost to the same frequencies as their non-3D counterparts, while the 7700X boosts to 5.4 GHz and the 7800X3D only boosts to 5.0 GHz?

I wonder if they are trying to push gamers up to the higher price tiers for the best performance by limiting the 7800X3D to only a 5.0 GHz boost.


This post was edited on 1/5/23 at 11:03 am

Posted on 1/5/23 at 11:05 am to thunderbird1100

Looks like the higher boost on the 7900X3D and 7950X3D is going to be for the chiplet that doesn't have the stacked cache.

guru3d

quote:

The Ryzen 7 7800X3D has a maximum clock speed of 5.0 GHz, while the Ryzen 7 7700X has a maximum clock speed of 5.4 GHz. The Ryzen 9's high turbo is because the cache chip is carried by only one chiplet.


Posted on 1/5/23 at 5:14 pm to hoojy

Well that's interesting...

I wonder if the chip will have logic to intelligently switch between chiplets based on whether the task needs more cache or would be better served by higher clock.


Posted on 1/6/23 at 1:32 am to thunderbird1100

quote:

Then they used the 7800X3D for gaming benchmarks and not what should be faster 7900/7950X3D which was interesting.

This is because they market the 7800X3D as the ultimate gaming CPU for the money and the direct replacement for the 5800X3D.

The two larger new SKUs, with 12 and 16 cores, are aimed just as much at content creators as at gamers.

7900X3D is going to be the sweet spot in terms of cores, cache size (it only loses the 1MB of L2 per core that comes with the four extra cores) and clocks.

I'm just mad we didn't get a price for something that releases next month. I'd like to have my case, RAM and mobo ordered soon and just be waiting on the CPU. Oh, and the SSD, but where the hell are the PCIe 5 SSDs? First they were supposed to drop late last year, then early this year with showcases at CES. I need to go back and see if there were new ones at CES. I want to do it all in one swoop and not have to move the OS and everything else to a new SSD just a month or two later.

Ah, found an MSI demo at CES; these are releasing in Q2. At least that's something concrete.

This post was edited on 1/6/23 at 2:18 am

Posted on 1/6/23 at 5:36 pm to UltimateHog

It's a shame that there haven't been marked gains on random read/write over the last couple of generations. Most users don't really see anything from gains in sequential read/write, even massive gains. And games couldn't care less about sequential.

On CrystalDiskMark (CDM), that drive has 4x the sequential read/write of my Gen 3 NVMe drive, but only twice the random Q32T16 performance and nearly identical random Q1T1 performance. Now, for professionals who move massive video files around all day? That 4x performance is incredible. But most people rarely, if ever, do that. They likely won't even see noticeable gains, much less gains that justify the current price tags.


Posted on 1/6/23 at 6:05 pm to Joshjrn

I just wish I could buy it now, but they keep delaying them. First it was November, then a CES launch, now Q2.

Posted on 1/8/23 at 8:58 pm to Joshjrn

Posted on 1/9/23 at 9:08 am to Joshjrn

Debating whether to get a new-gen 3D chip or just a 5600. Will wait until Black Friday to decide.

The GPU prices are gonna have to come waaaaaaaay down. The 6xxx series is cheap, but I do want some ray tracing performance. I'm part of the problem, I guess.


Posted on 1/9/23 at 10:15 am to hoojy

I've been watching a lot of YouTube previews of the new 4000-series laptops at CES, and a ton of them seem to have strangely low TGP ratings.

For example, I saw a budget gaming laptop starting at $999 with 4050 and 4060 options, and they said the GPU (presumably the 4050 option) is rated at 35W? That seems crazy low for a budget full-size gaming laptop. These chassis have handled 80-115W GPUs in the past with no problem, and the 4050 is rated up to 115W anyway. This wasn't a thin-and-light either; it was something equivalent to a TUF 15.

I also saw a premium thin gaming laptop they said would be limited to about 65W but still offered up to a 4070 or so. That seems totally pointless.

Is this an Nvidia thing, or what's going on there?


Posted on 1/9/23 at 10:23 am to thunderbird1100

If you can get equal or more performance for a significant drop in power, you take it all day in a laptop.

Posted on 1/9/23 at 10:41 am to bluebarracuda

quote:

If you can get equal or more performance for a significant drop in power, you take it all day in a laptop.

You're leaving a ton of performance on the table, though, if you take a budget laptop that would typically have an 80-115W GPU and suddenly limit it to just 35W. Even if a 35W card performs similarly to a 30-series card at 80W, that seems utterly pointless for a gaming laptop. You want to push as many frames as possible. People aren't paying for efficiency in the budget gaming lines; that's what the thin-and-lights are for. They want the most performance they can get for the price. An 80W 4050 would stomp all over a 35W 4050.

This post was edited on 1/9/23 at 10:43 am
